Bio

I graduated from Stanford University with a B.S. in Mathematics and an M.S. in Computer Science, concentrating my studies in probability and machine learning. I was fortunate to be advised by Amir Dembo for my undergraduate degree and Christopher Ré for my graduate degree.

I now work as a Research Scientist at NVIDIA on the foundation model training team. I primarily focus on developing new large language models (LLMs) and augmenting their abilities via continued training.

In the past, I've:

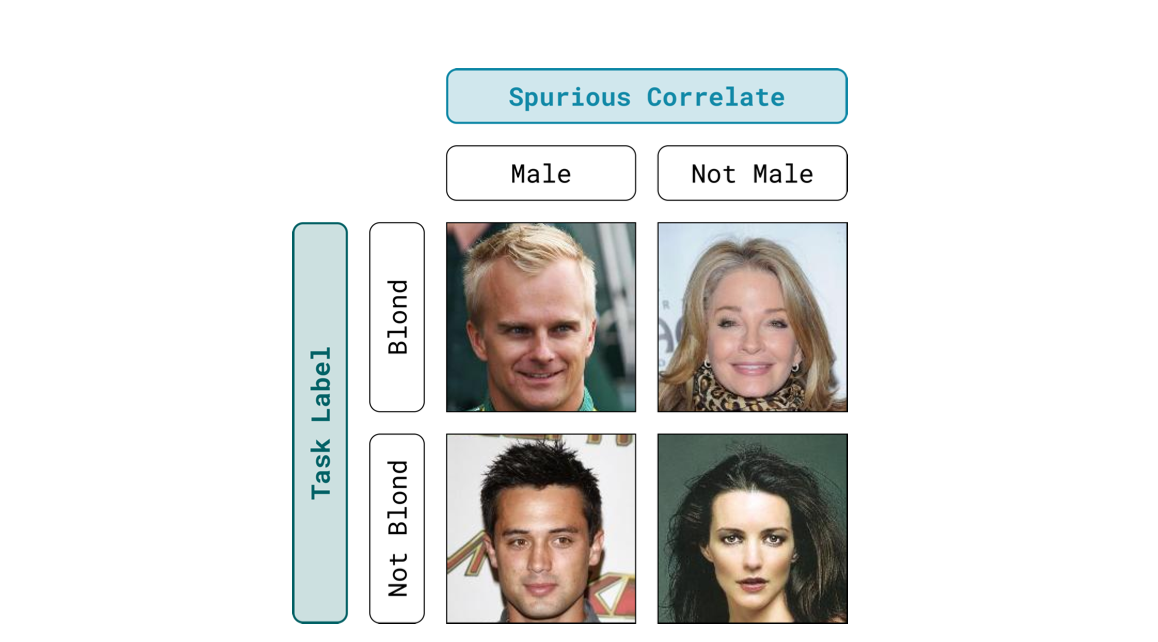

Worked with Tatsu Hashimoto on improving the group robustness of machine learning models. Current models achieve high average performance but can incur high error on certain groups of rare and atypical examples. We developed a new framework that allows models to achieve better worst-group performance.

Worked as a teaching assistant for CS 234: Reinforcement Learning, where I had the wonderful opportunity to help introduce students to the exciting field of reinforcement learning. Teaching has always been a passion of mine, and it was gratifying to aid in the educational journey of others.

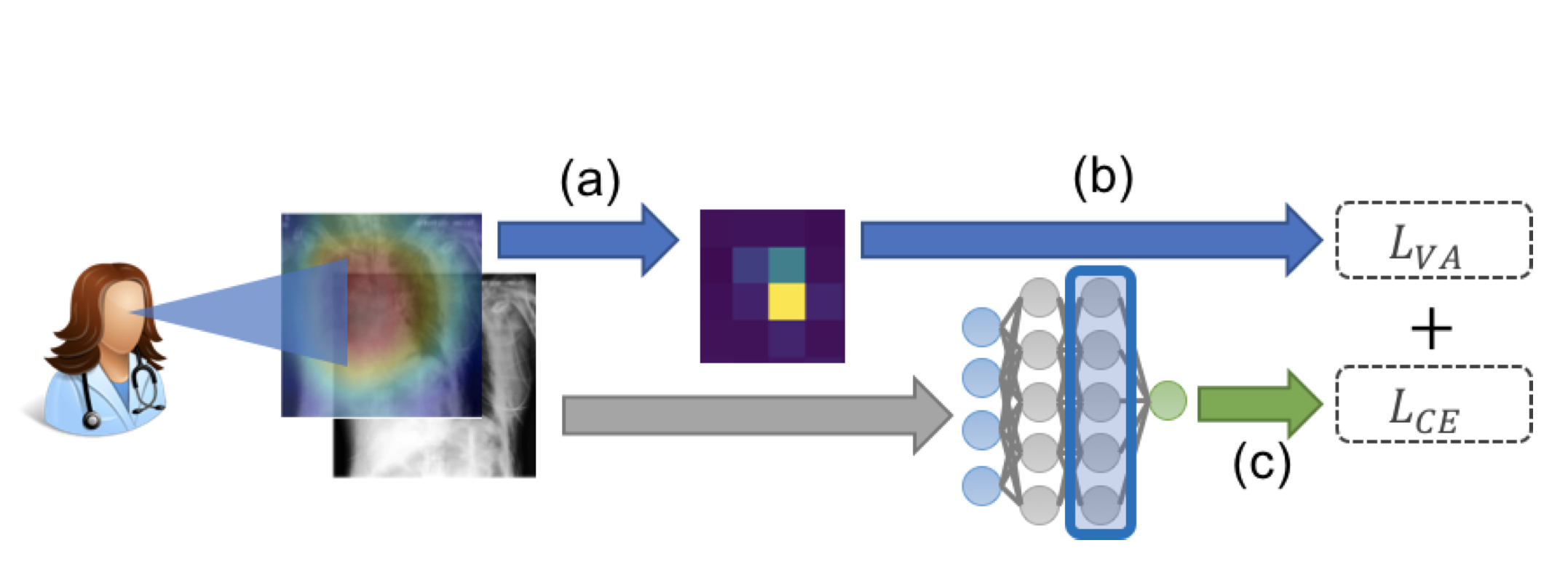

Researched in Chris Ré's lab at Stanford, where I worked with Khaled Saab on using passively observed human signals, such as gaze, to learn better task representations and train more robust, generalizable deep learning models for medical image classification.

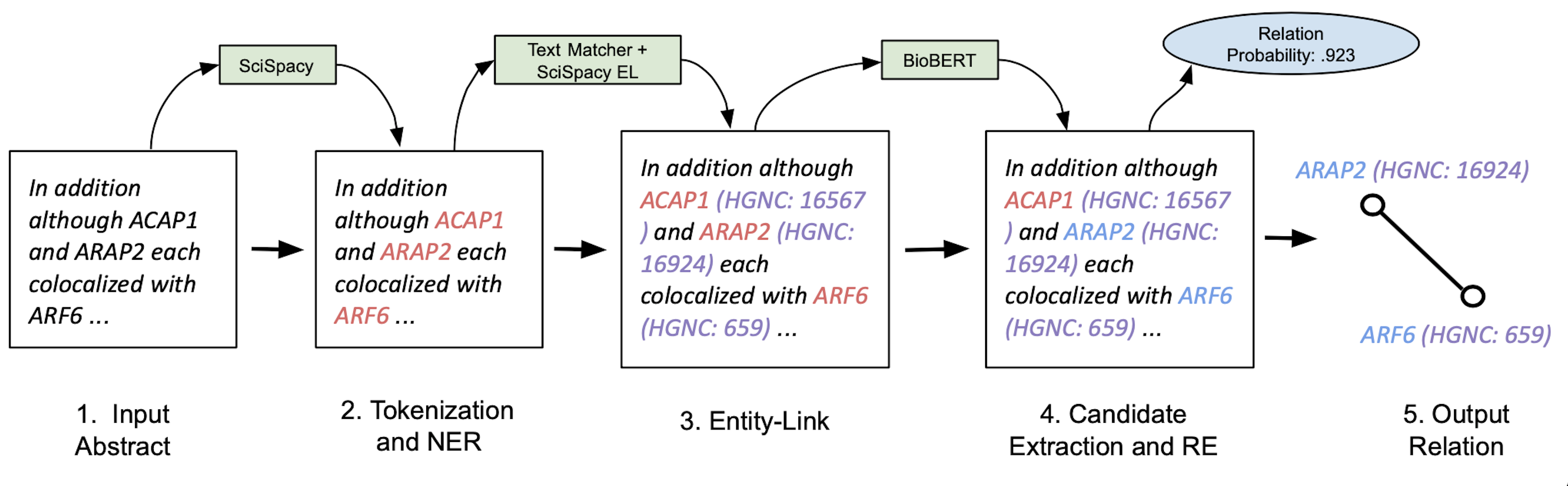

Worked as a Machine Learning Engineer and the first Product Manager for OccamzRazor. OccamzRazor is an early-stage company developing a biomedical knowledge graph that aims to advance understanding of drug efficacy and provide novel treatments for diseases. My initial focus was on expanding their natural language processing pipeline by researching and engineering new architectures for biomedical information extraction. Later, I focused on expanding the product platform from repurposing known drugs to the broader set of small molecules that actively interact with any druggable protein.

Worked as a quantitative trading intern at IMC Trading on the Small Index Options Desk. I developed new trading strategies to improve performance on US Options Auctions by leveraging information regarding market sentiment.

Researched with David Chin at UMass Amherst, where we investigated the intersection of quantum and classical machine learning. In particular, we examined whether quantum models could help us better assess the quality of care provided by hospitals.

Worked with David Doty at UC Davis, where we built a domain-specific language that allows researchers to implement complex theoretical models in the field of algorithmic self-assembly.

Projects

Publications and Written Pieces

The Importance of Background Information for Out of Distribution Generalization PDF

Jupinder Parmar, Khaled Saab, Brian Pogatchnik, Daniel Rubin, Christopher Ré

Workshop on Spurious Correlations, Invariance, and Stability at the International Conference on Machine Learning (ICML 2022)

Observational Supervision for Medical Image Classification using Gaze Data PDF

Khaled Saab, Sarah Hooper, Nimit Sohoni, Jupinder Parmar, Brian Pogatchnik, Sen Wu, Jared Dunnmon, Hongyang Zhang, Daniel Rubin, Christopher Ré

Medical Image Computing and Computer Assisted Intervention (MICCAI 2021)

Biomedical Information Extraction For Disease Gene Prioritization PDF

Jupinder Parmar, William Koehler, Martin Bringmann, Katharina Sophia Volz, Berk Kapicioglu

Knowledge Representation and Reasoning Meets Machine Learning Workshop at Neural Information Processing Systems (NeurIPS 2020)

A Formulation of a Matrix Sparsity Approach for the Quantum Ordered Search Algorithm PDF

Jupinder Parmar, Saarim Rahman, Jesse Thiara

International Journal of Quantum Information (2017)

Resume

NVIDIA, September 2022 - Current, Research Scientist

Foundation Language Models

OccamzRazor, September 2021 - June 2022, Product Manager

Expanded the Existing Product Platform

IMC Trading, Summer 2021, Quantitative Trading Intern

Developed Trading Strategies for U.S. ETF Option Auctions

Stanford University, 2020 - 2022, Graduate

M.S. in Computer Science. Advised by Christopher Ré.

OccamzRazor, March 2020 - June 2021, Machine Learning Engineer

Worked on NLP and Graph Learning

Stanford AI Lab, November 2019 - now, Researcher

Working on Representation Learning and Domain Generalization

SAS Institute, Summer 2019, Software Engineer Intern

Used Machine Learning to Predict System Failures

Stanford University, 2018 - 2022, Undergraduate

B.S. in Mathematics. Advised by Amir Dembo.

Acknowledgement

This website draws design inspiration from Tatsunori Hashimoto and Jason Zhao.